This is a hands-on follow up to the article Four Rules of Vibe Coding (Without the Chaos).

This guide dives deep to put those principles into practice, specifically in Cursor.

This guide is for developers or tech savvy product managers who want to build production-grade applications. It's designed for those building the first versions of a product or iterating on existing production codebases (alone or in a small team).

This isn't a guide for people who expect to type 3 sentences into a chat box and get a working application without understanding what the AI did for them. If you're serious about building something into production, you need to put in the work. The setup takes time, but it pays off massively in the end.

This guide was written based on:

- 600+ hours of vibe coding

- $3,000+ spent on tokens

- battle tested on several production applications: videosupport.io, craftnow.ai, aqualeads.co

You don't need to implement every recommendation here from day one. Start simple, and refer back to this guide when you hit walls or waste time on repetitive issues.

We've all been there...

We've all been there...

Use these links below to easily jump back to each section along your journey:

⚙️ Part 1: Basic Setup

🏗️ Part 2: Repository Setup

📝 Part 3: Writing Specifications

✂️ Part 4: Task Breakdown

🧩 Part 5: Context Window

🤝 Part 6: Human in The Loop

🧪 Part 7: Testing Gatekeepers

🐛 Part 8: Debugging

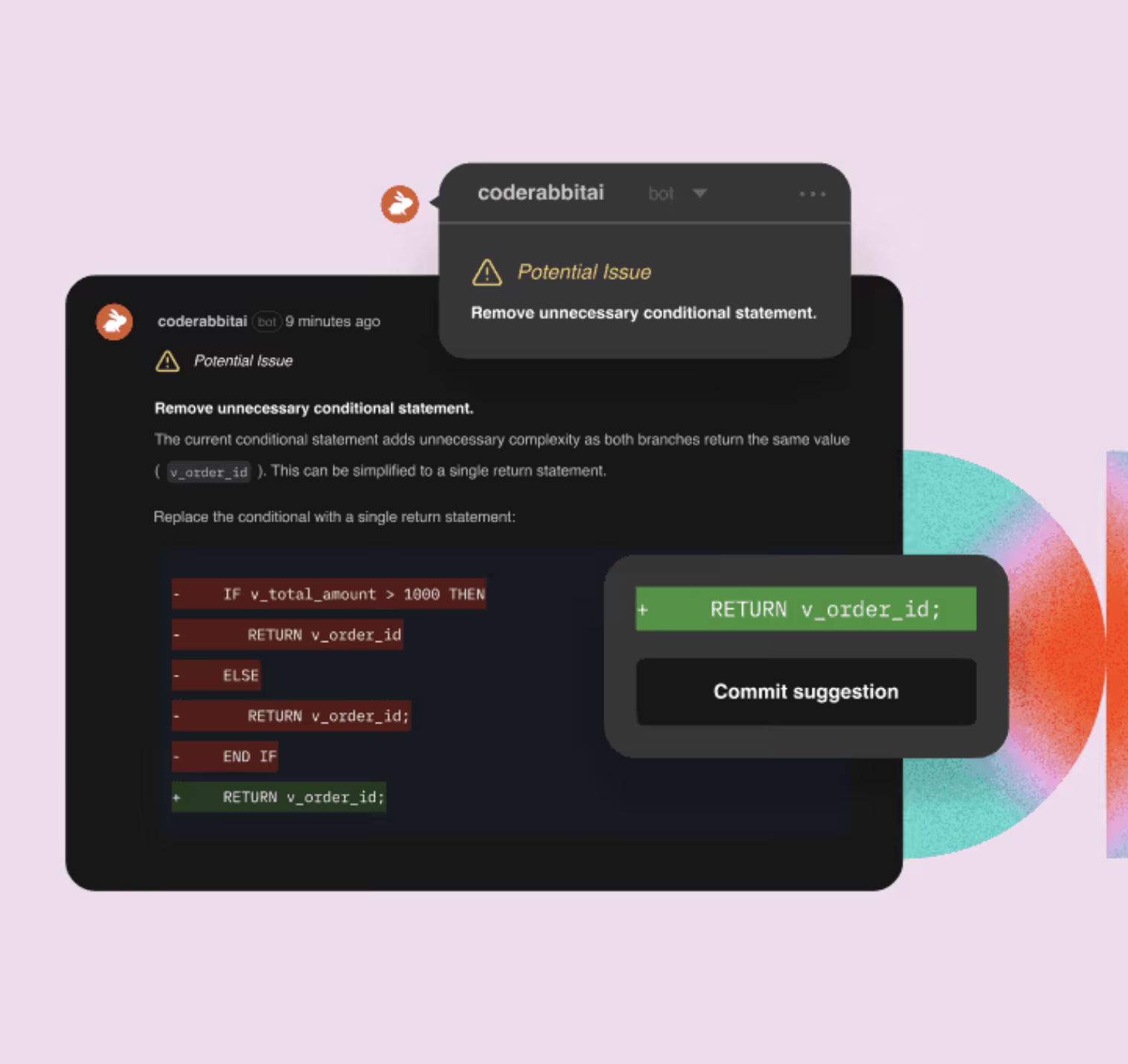

👀 Part 9: Code Reviews

📦 Part 10: Version Control

🚀 Part 11: Deployment

🔒 Part 12: Special Audits

⚡ Part 13: Tips and Tricks

📋 Your Cursor Vibe Coding Checklist

A Quick Note

You'll notice this guide has a lot of information and recommendations. It might feel like it kills the vibe, right? All this planning before Cursor writes a single line of code?

Here's the thing: You wouldn't hire a bridge engineer who says "I'll just vibe it."

Imagine asking someone to build a bridge across a river in your city. They show up and say, "I don't have plans, haven't really thought this through, no process, but don't worry, I'm just going to vibe it." You'd never drive on that bridge.

There's a difference between sketching a bridge on paper (which feels easy, almost playful) and building the actual structure that thousands of people will drive across every day. The same applies to code. Prototypes can be vibed. Production applications - the ones real users depend on, the ones that handle payments, store data, and need to work reliably - require intentional planning and a mix of specific skills.

Or maybe you'd prefer this...

📦 Templates

Across this guide, I will reference certain documents or prompts that I use in my setup. You can download all of them here. It contains:

-

Cursor Rules - Pre-configured .cursorrules files that you can drop in your project, including a self-improvement rule template for creating rules that learn from recurring mistakes.

-

Specification Documents - Product requirement templates, user story and acceptance criteria tracking, UX/UI specification sheets and more.

-

Agent Prompts - Prompts for the different agents used across our entire development flow (Product Manager, UX/UI designer, Software Architect, etc.)

Feel free to drop them into your project and refine them over time.

You'll notice those templates come in three formats:

.mdor.mdc- For instructions, prompts, and documentation (LLMs read these best).json- For structured data like configuration and lists (LLMs edit these reliably)

LLMs excel with markdown because it's plain text with clear structure, and JSON because it's predictable and parseable. Use .md files for any text-based instructions you want LLMs to understand, and .json for data that needs to be parsed or sorted. Don't mess around with other formats.

Let's get to it.

⚙️ Part 1: Cursor Basic Setup

The 4 Modes

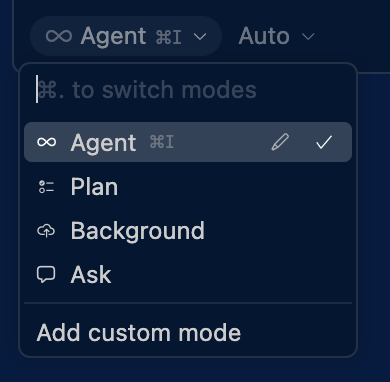

Cursor has four main modes for different workflows:

-

Ask Mode- Search and understand your codebase without making changes. Perfect for learning how code works before you modify it or get onboarded on a new codebase. -

Agent Mode- The AI autonomously explores, edits multiple files, runs commands, and fixes errors to complete complex tasks. -

Plan Mode- The AI creates structured plans by researching your codebase, asking clarifying questions, and generating a Markdown file with file paths and code references. You can review and edit the plan before building from it. Start planning by pressingShift + Tabin the agent input. -

Debug Mode- Instrument and investigate bugs with runtime evidence before applying fixes.

Cloud Agents (formerly Background Agents) let you run agents asynchronously in a remote environment while you keep coding locally.

This guide focuses primarily on Agent Mode and the planning workflow.

It's best to use Agent Mode to learn the fundamentals first, effective prompts, workflow patterns, and agent control. Once you're confident with Agent Mode's patterns and limitations, Cloud Agents become a powerful way to parallelize work. But without solid Agent Mode experience, you'll struggle to debug issues or guide the agents effectively. It's like using cruise control before you know how to drive your car.

Agent Tools Overview

Cursor agents have access to several core tools that work together to complete tasks:

Search Tools

Files- Find files based on their names for quick accessDirectory- Display directory contents and understand project structureCodebase- Perform semantic searches to locate relevant code snippets (a huge part of what makes Cursor so powerful)Grep- Execute regex-based text searches across files for specific patternsWeb- Conduct web searches to gather external informationFetch Rules- Retrieve predefined rules or guidelines for consistency (we explain this later)

Edit Tools

Edit & Reapply- Apply specific edits to files and reapply them as neededDelete File- Remove unnecessary or obsolete files from your project

Run Tools

Terminal- Execute terminal commands directly within the IDE

MCP (Model Context Protocol) Tools

Toggle MCP Servers- Manage connections to configured MCP servers for integrations with external services (we will cover that later on in the "Context Window" section)

Key Agent Settings

Cursor has a ton of settings. You will have plenty of time to figure them all out. Here are the few that matter most when getting started.

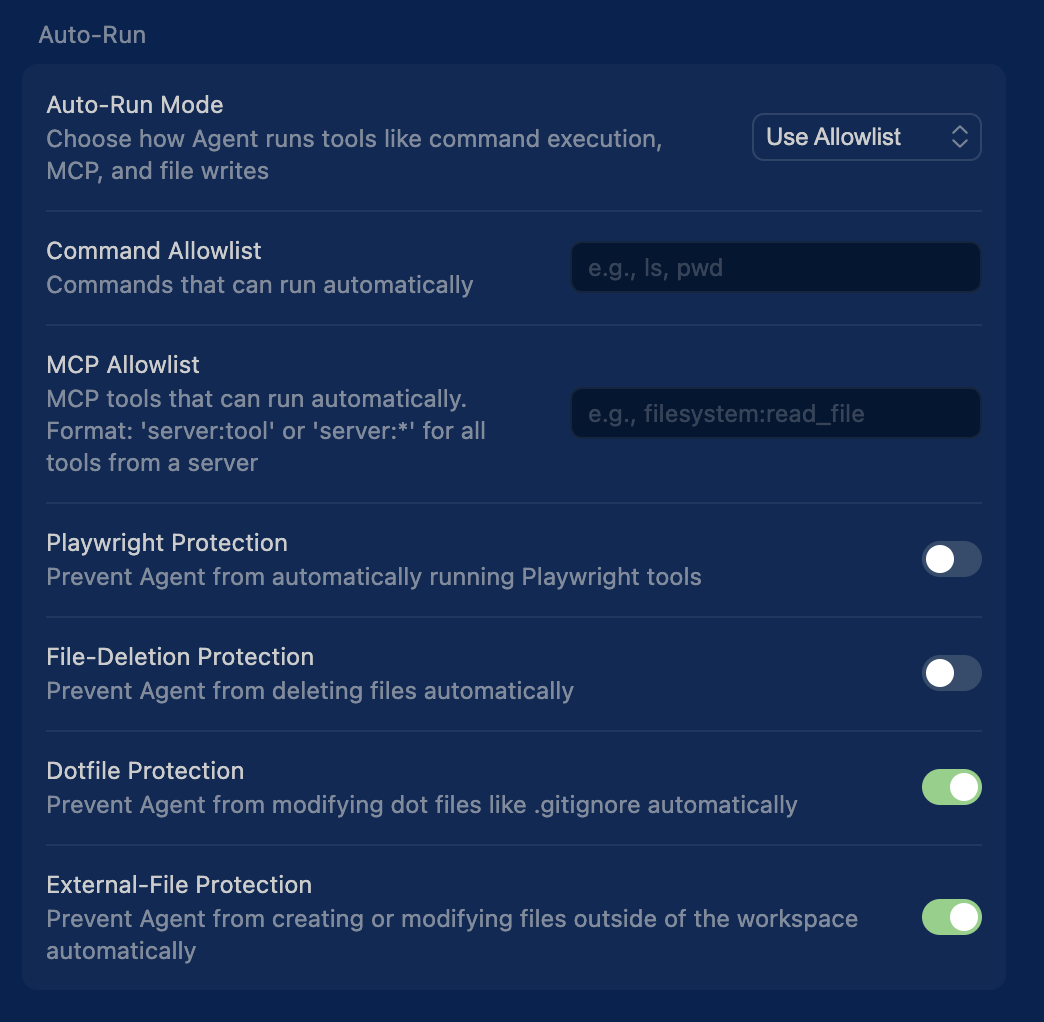

Auto-Run: You can configure which terminal commands the agent is allowed to run without your manual input while blocking others. Commands that are not in the Allowlist list will require your manual input to be ran. It is a good safety mechanism as terminal commands can be quite destructive.

Auto run title

Auto run title

If you're using GitHub for version control (you definitely should), you generally don't need to worry about file deletions. The agent might use rm commands, but you can always recover through version control. If you're still nervous, enable "File Deletion Protection" to get confirmation prompts for those risky file operations (it's usually cumbersome to recover from rm commands on your local machine).

For MCP server access (especially important in corporate environments where data sharing is a sensitive topic), you can use "MCP Tools Protection" to require approval before the agent calls any external services. This gives you full control over data sharing from third-party tools.

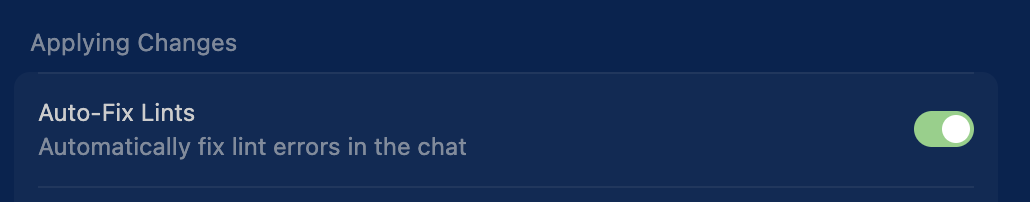

Applying Changes: Settings related the agent behavior post edits.

The "auto-fix lints" setting is usually ON by default, but verify it's enabled. The agent will check for lint errors at the end of each task and fix them automatically. It's a life saver. You'd catch these issues when running localhost anyway, but this saves you the headache.

Ignore Files

Cursor uses two types of ignore files to control what the AI can access:

.cursorignore - Completely blocks files from AI access (like .gitignore but for Cursor, it follows the same syntax). Use this for sensitive files such as environment files, credentials or API keys.

.cursorindexingignore - Excludes files from codebase search but still allows AI access. Good for large files that slow down searches such as .log, .cache or assets (images, videos, etc.).

Cursor already includes a pretty solid default list (see here).

It covers:

✅ Security: .env*, credential files, lock files.

✅ Large media: images, videos, audio, archives

✅ Build artifacts: node_modules/, .next/, .nuxt/

✅ Version control: .git/, .svn/, .hg/

✅ Fonts: .ttf, .otf, .woff, .woff2.

This probably covers 95% of the cases.

In the templates I created, you will find my file (in /setup/.cursorignore) that contains an extra scope. You can just copy paste it into your file.

Cursor Rules: Best Practices

Rules in Cursor provide persistent instructions that shape how Agent chat behaves in your project. Think of them as permanent context for coding standards and workflows. Note: User Rules are not applied to Inline Edit (Cmd/Ctrl + K).

Quick Start: If you want high-quality pre-written rules for your tech stack (Next.js, React, Tailwind, Laravel, Django, etc.), check out:

- Cursor Directory - Community-driven collection of cursor rules and prompts across 100+ frameworks and languages.

- Playbooks.com/rules - Battle-tested, production-ready cursor rules curated for specific languages and tasks.

Both provide excellent starting points that you can drop directly into your project and customize for your specific needs.

You will also find my own cursor rules in the templates.

In this guide, we'll focus on three practical rule layers: Project Rules, User Rules, and AGENTS.md. Cursor also supports Team Rules, and legacy .cursorrules is still supported.

1. Project Rules

Project rules live in .cursor/rules and are version-controlled with your codebase. Each rule is a separate .mdc file (MDC = Markdown with metadata) that can be configured to apply in different ways:

- Always - Included in every AI interaction

- Auto Attached - Triggered when specific file patterns (globs) are referenced

- Agent Requested - AI decides when to include them based on the description

- Manual - Only included when explicitly mentioned using

@ruleName

Use project rules for:

- Domain-specific knowledge about your codebase architecture

- Project-specific workflows and templates

- Framework conventions (React patterns, API structure, etc.)

- Code style and naming conventions specific to this project

📁 Click to see example structure

project/

.cursor/rules/ # Project-wide rules

backend/

.cursor/rules/ # Backend-specific rules

frontend/

.cursor/rules/ # Frontend-specific rulesYou can nest rules in subdirectories, and they'll automatically attach when files in those directories are referenced. This is really powerful because it allows you to reduce the context window whenever the agent is working on different parts of the codebase. It makes sense that testing best practices for instance aren't relevant to your agent when it's editing UI components, but frontend best practices definitely are.

2. User Rules

User rules are global preferences set in Cursor Settings → Rules that apply across all your projects in Agent chat. They're plain text and perfect for personal coding preferences that transcend any single project.

Use user rules for:

- Communication style preferences (concise vs. verbose)

- Personal coding conventions you always follow

- General development principles you apply everywhere

- Language or framework preferences

📝 Click to see example of user rules

Please reply in a concise style. Avoid unnecessary repetition or filler language.

Prioritize code readability over cleverness. Write self-documenting code.

Add inline comments only for complex logic. Avoid obvious comments.

Use meaningful variable and function names. Avoid abbreviations.

Write modular code with single responsibility principle.

Keep functions small and focused. Extract complex logic into helper functions.

Avoid premature optimization. Profile before optimizing.I provide templates for those rules (used in a Ruby on Rails / React codebase) that you can adapt to your own stack.

3. Agent Instructions (AGENTS.md)

AGENTS.md is a simpler alternative to .cursor/rules for straightforward projects. It's a plain markdown file placed in your project root (or subdirectories) without metadata or complex configurations.

Use AGENTS.md when:

- You want simple, readable instructions without MDC complexity

- Your project doesn't need conditional rule application

- You prefer a single file over multiple rule files

- You're starting fresh and want minimal setup

📝 Click to see example AGENTS.md

Project Instructions

Code Style

- Use TypeScript for all new files

- Prefer functional components in React

- Use snake_case for database columns

Architecture

- Follow the repository pattern

- Keep business logic in service layers

Best Practices for Writing Rules:

✅ Keep rules focused and actionable - Under 500 lines in total

✅ Provide concrete examples - Show, don't just tell

✅ Split large rules - Create multiple composable rules instead of one giant file

✅ Reference files - Link to templates or example code using @filename

✅ Avoid vague guidance - Write like clear internal documentation

✅ Reuse patterns - If you're repeating the same prompt, turn it into a rule

❌ Avoid generic advice - "Write clean code" means nothing and everything.

❌ Don't overload context - Too many rules slow down AI responses

Pro tip: Use the /Generate Cursor Rules command in chat to convert conversations into reusable rules when you've made decisions about agent behavior. This is a good way to compound learnings and avoid getting the same basic errors over and over.

Which Type Should You Use?

- Project Rules - For team-shared, version-controlled coding standards and architecture patterns specific to your codebase

- User Rules - For personal preferences that apply to everything you code across any stack

- AGENTS.md - For simple projects or when you want a single, easy-to-edit instruction file

For more details, see documentation here.

Self-Improving Cursor Rules

Here's the reality: every time you close a chat window or start a new conversation, all the knowledge from that session disappears. Every debugging insight, every fix, every pattern the AI learned... gone.

With a human developer, that knowledge compounds. They remember what went wrong last time and avoid it next time. But Cursor doesn't have that memory unless you give it one. That's where evolving rules come in. That's how you turn Cursor from a junior developer to a senior one over time.

The Rule of Thrice:

Don't rush to create rules after every error. Follow this simple pattern:

- Once → It's a fluke. Just fix it and move on.

- Twice → It's a coincidence. Note it down.

- Thrice → It's a pattern. Create a rule.

When you encounter the same error for the third time, that's your signal to codify the fix into a Cursor rule.

How to do it:

After fixing a recurring error, prompt Cursor:

We've encountered this [specific error] three times now.

Create a cursor rule that:

1. Documents what mistake was being made

2. Provides the correct approach

3. Includes a code example if relevant

Save it to .cursor/rules/[descriptive-name].mdcPro tip: I've included a self-improvement rule template in the templates folder (/setup/self-improvement-rule-template.mdc) that provides a structured format for these rules. This is one simple implementation. Some teams build more complex system but it's effective for capturing and preserving knowledge as you code.

Picking Your Model

The model selection settings in Cursor

The model selection settings in Cursor

Cursor supports major model providers out of the box. You can pick the model that best fits your task.

Model Selection Guide:

Here has been my experience so far (as of February 2026; this evolves quickly with each new launch):

-

Opus 4.6 - Excellent for code implementation and technical writing. Anthropic models consistently perform best at actual code generation, understanding complex requirements, and producing production-ready implementations.

-

GPT Codex 5.3 - Great for code review and debugging. Strong at analyzing existing code and suggesting improvements.

-

Gemini 3 Pro - Good for planning and problem-solving. Takes initiative and can handle broad refactoring tasks.

-

Grok 4.1 - Particularly strong for writing specifications and requirements. Its truth-seeking nature makes it excellent for the specification phase, asking clarifying questions, and thinking through edge cases systematically.

-

DeepSeek-V3.2 - Strong value/performance option for engineering-heavy tasks and iterative implementation loops.

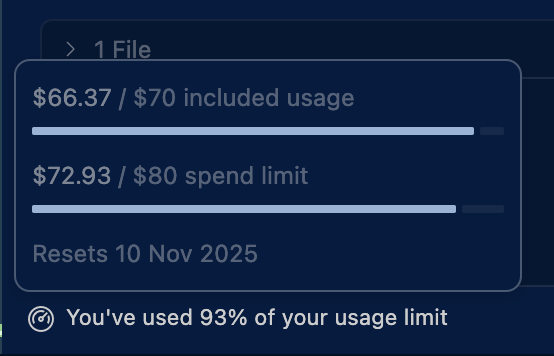

Auto Mode: with this switched ON, Cursor automatically selects the best model based on your prompt. Perfect for small edits and quick tasks. For complex features or specifications, manually select a model for better control. As far as I can tell,

Auto Mode will also help you save money on most small tasks because you don't need a very powerful model for simple edits. The rule of thumb: if the task requires a large context window or looking into many different files, Auto Mode might not be the best choice. But for focused edits like UI changes, method refactoring, or small code modifications in one specific area, Auto Mode works perfectly and keeps costs down.

When to Use Each:

- Small edits/quick fixes → Auto Mode

- Writing specifications → Grok 4.1 (spec phase) or Opus 4.6 (technical specs)

- New feature implementation → Opus 4.6 (best for actual code generation)

- Code review → GPT Codex 5.3 or Opus 4.6

- Complex debugging → Opus 4.6 or GPT Codex 5.3

- Planning and problem-solving → Gemini 3 Pro or Grok 4.1 (for requirement exploration)

You can also use your own API keys for officially supported providers in Cursor settings: OpenAI, Anthropic, Google, Azure OpenAI, and AWS Bedrock. See the official docs here.

Note: Since I'm French (🥖 🐓), I'm also particularly interested in exploring Mistral AI models in my workflow. I'll be writing about how to properly integrate Mistral models with Cursor in a future article.

🏗️ Part 2: Repository Setup

Unlike tools like Lovable or V0 that come with predefined stacks, Cursor gives you complete freedom to choose. You can use any stack you want, but this means you need to make several important decisions upfront.

When setting up your repository, you need to answer these critical questions (I'll use a web application as an example):

Key choices:

- Front-end framework - React, Vue, Svelte, or vanilla JS?

- Back-end framework - Next.js, Express, FastAPI, Django, Ruby on Rails or serverless functions?

- Database choice - PostgreSQL, MySQL, MongoDB, or other NoSQL solutions?

- Backend-as-a-Service (BaaS) - Will you use Supabase (includes auth, storage, database, and more) or build a custom backend? I highly recommend Supabase if you want to skip a lot of headaches in the early days.

- UI library - Tailwind CSS, shadcn/ui, Material-UI, Chakra UI, or custom CSS? (I highly recommend Tailwind CSS + shadcn/ui for rapid development)

- Analytics platform - PostHog, Mixpanel, Amplitude, or database-only tracking? Set this up from day one, you can't analyze what you don't track.

If not using a BaaS:

- Authentication system - Auth0, Firebase Auth, Clerk, or custom JWT implementation?

- Storage solution - AWS S3, Cloudinary, UploadThing, or self-hosted storage?

- File handling - How will you manage file uploads, transformations, and media delivery?

Development Setup:

- Testing frameworks - Jest, Vitest, Playwright, or Cypress for frontend/backend testing?

- Package manager - npm, pnpm, or yarn? (if using a Node backend)

- ORM/Database toolkit - Prisma, TypeORM, Drizzle, Sequelize, or raw SQL?

- Deployment strategy - Vercel, Netlify, Railway, AWS, or self-hosted infrastructure?

- Environment management - How will you handle dev/staging/production configurations?

- CI/CD pipeline - GitHub Actions, GitLab CI, CircleCI, or other automation?

Pro Tip: The LLM can help you think through all these questions and make informed decisions based on your project requirements, constraints, and long-term goals. Don't hesitate to ask for recommendations and tradeoffs between different options.

⚠️ Don't Skip Analytics

Analytics might feel premature when you're just starting, but here's the reality: the whole point of shipping features is to learn from real user behavior. If analytics is an afterthought, your ability to make data-driven decisions becomes an afterthought too. By the time you realize you need to track something, you've already lost weeks or months of valuable data.

Two Recommended Stacks:

In the end, the best stack is the one you master. If you don't have much experience with any stack, below are two stacks that I've been playing around with and got good results:

-

Modern Startup Stack: Next.js + React + Supabase

- Tons of features out-of-the-box from Supabase (auth, database, storage, real-time)

- Just make API calls, no backend complexity

- Popular choice for new projects and startups

- Great TypeScript support and modern tooling

- Easy deployment with Vercel integration

-

Old School Meets New School: Ruby on Rails + React with Inertia.js

- No API layer needed, Inertia.js handles the bridge between Rails and React

- Rails comes "batteries included" with sensible defaults

- "Convention over configuration" nature of Rails means fewer technical choices to be made by the LLM

- LLMs converge faster on solutions due to Rails conventions

- Decades of training data for both Rails and React

- Ruby is clean and token-efficient for LLMs

- React gives you modern frontend capabilities that Rails lacks

- Ruby on Rails continues to position itself as the "One Person Framework"

Pro tip: Once you've completed your repository setup, save it as a GitHub template repository. You don't want to repeat this work for every new project.

📝 Part 3: Writing Specifications

Alright, Cursor is setup, your repository is setup. Now the real work starts.

This is where you MUST engage in a questioning process with the LLM before any code is written. This is also where many of the insights transcend Cursor specifically, much of what follows applies to any AI coding tool.

Stop. Think. Then Build.

Just like good engineers pause to think before writing code, you should intentionally refrain from writing any code during this specification phase. Complex features always start with a good plain English description of what you're trying to accomplish. This specification process can take a bit of time and feel overkill but it's critical. You'll save countless hours down the line. I promise.

Intent is the Source of Truth

In lazy vibe coding, code becomes the source of truth. You iterate directly on code with the AI, tweaking and adjusting until something works, without clear intent documented anywhere. This is exactly what we want to avoid. Instead, intent should be your source of truth. Your specification determines what gets built. The code is generated from that intent, not the other way around. This is the core principle behind spec-driven development, where specifications become executable. The AI reads your intent and produces working code. When specifications are clear, you can iterate on implementations, try different technical approaches, or regenerate solutions without rewriting your requirements. The spec stays stable; the "how" can vary.

Why Vague Prompts Fail

Here's the reality: LLMs are exceptional at pattern completion, but not at mind reading. When you give a vague prompt like "add task assignment to my app," the AI has to guess at potentially thousands of unstated requirements. How do users select assignees? What permissions exist? Should there be notifications? What happens during reassignment? Every vague requirement multiplies possible interpretations exponentially. The AI will make some reasonable assumptions, and many will inevitably be wrong. You won't discover which ones until deep into implementation. Clear specifications reduce the solution space from thousands of possibilities to one clear path. That's why we specify first, then build.

The Specification Spectrum: Finding the Right Balance

Here's what I've learned: there's a spectrum between lazy prompting and full-blown specification documents. On one end, you have "build me a feature" with zero context—the AI fills in too many blanks with assumptions that don't match your needs. On the other end, you have complete Agile documentation with user stories, personas, epics, acceptance criteria for everything, and exhaustive data models that bloat your context window and cause "context rot."

Neither extreme works well with LLMs.

The sweet spot is somewhere in between: define sufficiently to provide the context required for the LLM, but not so much that you overwhelm it with irrelevant information. LLMs don't need to know about personas or business alignment the way human teams do. They need enough context to write good code, but not so much ceremony that the important details get lost in the noise.

Three methodologies have influenced my thinking here:

1. Linear's Approach - The issue tracking tool doesn't believe in user stories. They advocate for simply defining what you're building without the ceremony. Direct, clear, focused.

2. Jobs To Be Done (JTBD) - This framework emphasizes job stories over user stories. Job stories focus on what the feature does rather than who it's for. Knowing "who" it's for is a business question that helps humans align, but it's not necessarily relevant for writing good code.

3. Shape Up - From 37signals (creators of Basecamp and Ruby on Rails), this methodology introduces concepts like "no-gos" (explicit boundaries) and "rabbit holes" (risks to avoid). It's just as important to tell the LLM what NOT to do as it is to tell it what to do.

Two Ways LLMs Go Off the Rails:

-

They build functionalities you never asked for - Based on training data, they assume certain features should exist a certain way. If you're building a todo app, they might add categories, priorities, and due dates even if you just wanted a simple list.

-

They over-engineer the implementation - They reach for complex patterns, add unnecessary abstractions, or build elaborate systems when a simple solution would work.

The way to prevent this is with explicit no-gos and rabbit holes. Create guardrails by being clear about what you don't want: "Don't add authentication," "Don't create a separate API layer," "Keep the data model simple with just a single table."

Requirement Subtraction: Question Everything

Before diving into the workflow, I've found Elon Musk's first principle of engineering quite useful here: Make the requirements less dumb.

He famously said...

The requirements are definitely dumb; it does not matter who gave them to you. It's particularly dangerous if a smart person gave you the requirements, because you might not question them enough.

— Elon Musk —

His rule: If you're not occasionally adding things back in, you're not deleting enough.

(You can find the short video here)

When working with AI on specifications:

✅ Question every requirement - Does this feature actually solve the user's problem, or is it just "nice to have"?

✅ Kill scope aggressively - Remove features that don't directly serve your core value proposition

✅ Default to "no" - Every feature you add is code you must maintain, test, and debug

✅ Remove first, optimize later - Deletion is always faster and safer than building

Pro tip: During the Product Manager phase (covered below), explicitly ask: "What can we remove from this feature while still solving the core problem?". The best code is the code you never write. The best feature is the one you don't build because you found a simpler solution.

The Squad Approach

Instead of throwing vague feature requests at the AI and hoping for the best, we're going to use a structured, multi-agent workflow. Think of it as assembling a specialized team (similar to a squad in startups) where each AI agent plays a specific role:

- 📋 Product Manager

- 🎨 UX/UI Designer

- 🏗️ Software Architect

Squads are essentially small navy seal teams of domain experts that come together to ship features fast. That's exactly what you want with your vibe coding setup.

Each agent asks targeted questions in their domain, builds on the previous agent's work, and produces specific deliverables. By the end of this process, you'll have comprehensive specifications that any developer (human or AI) can implement with confidence.

Quick Start with Cursor Plan Mode

Before diving into the comprehensive workflow below, it's worth mentioning that Cursor recently shipped Plan Mode, which provides a lightweight alternative to the detailed specification process outlined in this guide.

Plan Mode (activated by pressing Shift + Tab in the agent input) will:

- Research your codebase to find relevant files

- Ask clarifying questions about your requirements

- Generate a Markdown plan with file paths and code references

- Allow you to edit the plan before building

When to Use Plan Mode vs. The Full Workflow:

Plan Mode is a good starting point if you want to move fast without investing much time in planning. However, it has limitations compared to the comprehensive approach I recommend:

- Limited questioning scope - Usually asks 4-5 questions instead of the more thorough exploration needed for complex features

- Mixed concerns - Combines behavioral and technical questions without separating UX/UI considerations

- Assumes more - Doesn't go through design details, technical choices, or non-functional requirements systematically

- No specialized expertise - Uses a single general agent rather than domain-specific Product Manager, UX/UI Designer, and Software Architect perspectives

Think of Plan Mode as a very lightweight version of the workflow below. For simple features or when you're just experimenting, Plan Mode works well. For complex features where quality, maintainability, and meeting precise requirements matter, I believe the workflow suggested below works best.

My recommendation: If you're new to vibe coding or working on a complex feature, start with Plan Mode to test the waters and get a feel for the questioning process. Then, when you're ready for higher quality specifications, adopt the comprehensive workflow below.

The Three-Agent Workflow

The specification process I recommend uses 3 specialized agents working sequentially:

- 📋 Product Manager - Defines WHAT to build and WHY

- 🎨 UX/UI Designer - Defines HOW users interact with it

- 🏗️ Software Architect - Defines HOW to build it technically

Each agent produces specific deliverables that the next agent builds upon.

Note: you are free to design your own workflow for your own needs. This 3 agent workflow simply follows how most squads tend to work within startups that ship fast.

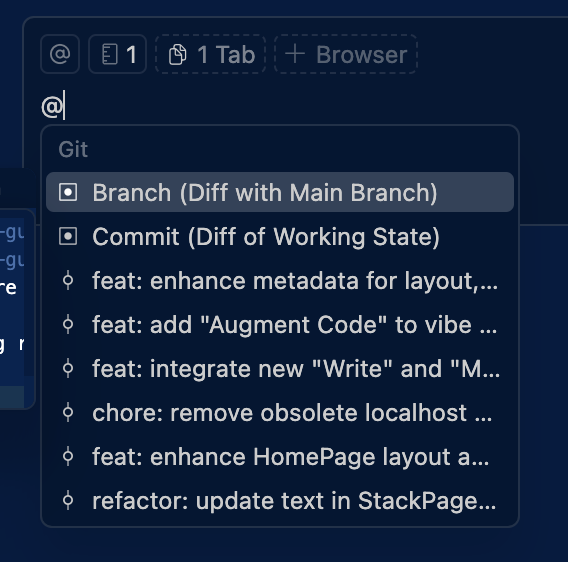

Pro tip: Store your agent prompts (Product Manager, UX/UI Designer, Software Architect) as Cursor Commands in .cursor/commands/ (e.g., /pm, /ux, /architect). This lets you trigger each agent with / syntax instead of copy-pasting prompts every time.

Complete Workflow Overview

Before we dive into the details of each agent, here's how the entire process flows:

Feature Request

↓

┌───────────────-─────────┐

│ 📋 Product Manager │

│ ──────────────────── │

│ • Ask strategic Qs │

│ • Generate PRD │

│ • Write job stories │

│ • Define no-gos │

│ • Identify rabbit holes│

│ • Edge case criteria │

└──────────────-──────────┘

↓

┌────────────────────────┐

│ 🎨 UX/UI Designer │

│ ──────────────────── │

│ • Ask interaction Qs │

│ • Breadboard flows │

│ • Screen inventory │

│ • ASCII wireframes │

└────────────────────────┘

↓

┌────────────────────────┐

│ 🏗️ Architect │

│ ──────────────────── │

│ • Ask technical Qs │

│ • Architecture spec │

│ • Technology choices │

│ • Implementation risks│

└────────────────────────┘

↓

Complete SpecificationThe Process:

-

📋 Product Manager asks strategic questions about business requirements, feature behavior, and edge cases. Then generates a Product Requirements Document (PRD) with job stories (not user stories), no-gos, rabbit holes, and acceptance criteria only for critical edge cases.

-

🎨 UX/UI Designer asks questions about user interactions, creates breadboards to map out flows at the right level of abstraction, builds a screen inventory (what to add/edit/delete), and produces ASCII wireframes when visual detail is needed.

-

🏗️ Software Architect asks technical questions about non-functional requirements, technical constraints, performance, security, and infrastructure. Creates a comprehensive architecture specification with technology recommendations and identifies implementation risks.

File Structure:

I recommend creating a specifications folder at the root of your project. This folder will contain all your prompts for all your agents (inside a agents folder, unless you use Cursor's commands) as well as all the outputs of your specs inside a features folder.

You can find an example of such folder (as I use it in my workflow) in the templates I provide [here].(#templates)

Here's a suggested structure you can adapt based on your preferences:

specifications/

├── agents/ # Agent prompts (if not using Cursor commands)

│ ├── product_manager.md

│ ├── ux_designer.md

│ └── architect.md

│

└── features/

└── task-assignment/ # One folder per feature

├── product_requirements_document.md # Product specs (job stories, no-gos, rabbit holes, edge cases)

├── design_document.md # Design specs (breadboards, screen inventory, flows)

├── architecture_document.md # Technical specs (architecture, tech choices, risks)

│

├── pm/

│ └── pm_questions.json

│

├── designer/

│ ├── designer_questions.json

│ └── wireframes/ # ASCII wireframes (when needed)

│ ├── task-list.txt

│ └── assign-modal.txt

│

└── architect/

└── architect_questions.jsonKey Points:

- Each feature gets its own folder

- Three main documents: PRD (product), Design Doc (UX/UI), Architecture Doc (technical)

- Questions generated by LLMs and answered by the human (you) are saved as JSONs for reference

- Wireframes are separate files only when visual detail is needed

- All files are text-based (Markdown/JSON) for easy version control and LLM parsing

Phase 1: Product Manager

The Product Manager (PM) agent's job is to understand the what and why of the feature from a product perspective. This agent asks strategic questions about business requirements, user needs, and edge cases.

Step 1: Initial Questions

The PM starts by asking questions to understand:

- Core problem: What problem are we solving?

- Appetite: How much time is this worth? (Borrowed from Shape Up - sets time constraints upfront)

- Job to be done: What is the user trying to accomplish?

- Scope boundaries: What's in scope and what's explicitly out?

- Edge cases: What unusual scenarios need handling?

- Success criteria: How do we know this worked?

Example questions the PM might ask:

1. What problem does task assignment solve for your users?

2. How much time is this feature worth? (Small batch: 1-2 weeks, Big batch: 6 weeks)

3. When would a user need to assign a task to someone?

4. Can a task be assigned to multiple people?

5. What happens if you assign a task to someone who doesn't have access?

6. Should there be notifications when tasks are assigned/reassigned?

7. Can users reassign tasks, or only the original assignor?

8. What's explicitly NOT part of this feature? (e.g., no task ownership transfer, no delegation chains)These questions force you to think through the feature systematically. The PM captures your answers and uses them to generate the specification.

Step 2: Generate Job Stories (Not User Stories)

Instead of traditional user stories with personas ("As a project manager, I want to..."), the PM generates job stories that focus on the situation, motivation, and outcome:

Format: When [situation], I want to [motivation], so I can [expected outcome].

Why job stories over user stories?

- They focus on what the feature does, not who it's for

- LLMs don't need personas or business context that's relevant for human alignment

- They're more direct and concrete for AI implementation

- Companies like Intercom and Basecamp successfully use this approach

Example job stories in the PRD:

Job Stories

JS-001: Assign task to team member

When I'm looking at a task that needs someone else to handle it I want to assign it to a team member So I can ensure they know it's their responsibility and I can track who's working on what

JS-002: Notification on assignment

When I assign a task to someone I want to them to receive a notification So they're immediately aware of their new responsibility

JS-003: View my assigned tasks

When I'm viewing my task list I want to see which tasks are assigned to me So I can know what I'm responsible for completing

These job stories drive the implementation without the ceremony of full user stories with acceptance criteria for every single interaction.

Step 3: Define No-Gos and Rabbit Holes

This is critical and often overlooked. The PM explicitly documents:

No-Gos: Features or functionality we're intentionally NOT building to fit the appetite and stay focused.

Rabbit Holes: Risks and implementation traps that could derail the project or cause over-engineering.

Example no-gos:

No-Gos

These are explicitly out of scope:

- No task ownership transfer (assigning doesn't transfer creation rights)

- No delegation chains (can't assign a task that's already assigned to you)

- No batch assignment (assign multiple tasks at once)

- No assignment history/audit log

- No "assign to team" (only to individuals)

Example rabbit holes:

Rabbit Holes

Watch out for these implementation traps:

-

Permission system complexity - Don't build a complex role-based permission system. Keep it simple: if you can see a task, you can assign it. More granular permissions are a v2 feature.

-

Real-time synchronization - Don't add websockets or real-time updates. Standard HTTP polling or page refresh is fine for v1.

-

Notification preferences - Don't build a notification preference system (email vs in-app vs both). Everyone gets email notifications, period. Preferences come later.

-

Assignment workflows - Don't create multi-step assignment workflows (request → approve → assign). Direct assignment only.

-

Data model over-engineering - Don't create separate tables for assignments, assigners, assignment history. Just add an

assigned_tofield to the tasks table.

By explicitly stating these boundaries and risks, you prevent the LLM from going down paths that waste time or add unnecessary complexity.

Step 4: Acceptance Criteria (Only for Critical Edge Cases)

Unlike traditional Agile where you write acceptance criteria for everything, we only document criteria for critical, non-obvious edge cases that could cause problems if implemented wrong.

These are typically:

- Payment/billing logic

- Security flows

- Data integrity issues

- Complex state transitions

When to write acceptance criteria:

- ✅ Does failure cost money? (payments, billing, refunds)

- ✅ Does failure lock someone out? (authentication, authorization)

- ✅ Does failure lose data? (exports, backups, critical writes)

- ✅ Does failure break integrations? (API calls, webhooks)

- ❌ Simple UI interactions (these don't need criteria)

Example acceptance criteria using Given/When/Then (Gherkin syntax):

Scenario: Cannot assign task to user outside project

Given I have a task in "Project Alpha"

And user "john@example.com" is not a member of "Project Alpha"

When I attempt to assign the task to "john@example.com"

Then the assignment fails

And I see an error: "Cannot assign to users outside this project"

And the task remains unassigned

Scenario: Reassignment notifies new assignee only

Given task #123 is assigned to "alice@example.com"

When I reassign task #123 to "bob@example.com"

Then "bob@example.com" receives an assignment notification

And "alice@example.com" receives a notification that task was reassigned

And the previous assignee "alice@example.com" is recorded in the taskThese acceptance criteria translate directly into test cases, which is why we use Given/When/Then format (it maps to Behavior Driven Development and the Gherkin language).

Deliverables:

pm_questions.json- Questions and your answers (saved in pm/ folder)product_requirements_document.md- Contains problem statement, appetite, job stories, no-gos, rabbit holes, and edge case acceptance criteria (in feature root folder)

Phase 2: UX/UI Designer

The Designer agent focuses on the how of user interaction. This agent asks questions about screens, flows, and interactions, then creates breadboards and wireframes.

Step 1: Designer Questions

The designer asks questions to understand:

- User flows: How do users move through this feature?

- Screen modifications: What existing screens need changes?

- New screens: What needs to be built from scratch?

- Interactions: What can users do on each screen?

- States: What different states does the UI have? (loading, error, success, empty)

Example questions:

1. Where in the existing UI does task assignment happen?

2. Is assignment inline on the task list, or in a modal/separate page?

3. How does the user select who to assign to? (dropdown, autocomplete, modal with search?)

4. Should assigned tasks look visually different from unassigned ones?

5. Where do users see tasks assigned to them?

6. What happens visually when assignment succeeds? (toast notification, visual update?)

7. How are assignment errors displayed to the user?Step 2: Breadboarding (Shape Up Technique)

Before diving into visual details, use breadboarding borrowed from electrical engineering. An electrical breadboard has all the components and wiring of a real device but no industrial design. Similarly, sketch out your interface with words first, not pictures.

A software breadboard has three key components:

- Places - Locations users navigate to (screens, dialogs, modals, menus)

- Affordances - Things users can interact with (buttons, fields, links, dropdowns)

- Connection lines - How affordances move users between places

Why breadboard first?

- Stays at the right level of abstraction (concrete but not detailed)

- Prevents premature design decisions

- Lets you think about logic and flow without visual distractions

- Gives the LLM clear structure without over-constraining design choices

Example breadboard in the design document:

Task Assignment Flow - Breadboard

[Task List Page]

- Each task shows:

- Task title

- Current assignee (if any)

- "Assign" button

- "Assign" button → [Assignment Modal]

[Assignment Modal]

- User search/select dropdown

- Current assignee display (if exists)

- "Assign" button → [Task List Page] (with success toast)

- "Cancel" link → [Task List Page]

[Task List Page - After Assignment]

- Shows success toast: "Task assigned to [name]"

- Updated task shows new assignee

- Auto-dismisses after 3 seconds

[Error States]

- Invalid user → Show error in modal: "User not found"

- Permission denied → Show error in modal: "You don't have permission to assign"

- Network failure → Show error in modal: "Assignment failed. Try again."

This breadboard captures the complete flow without making decisions about colors, exact positioning, typography, or detailed layouts. The LLM understands what needs to exist and how it connects.

Step 3: Screen Inventory

Create an inventory of what screens/components need to be created, modified, or deleted:

Example screen inventory in the design document:

Screen Inventory

New Screens

Assignment Modal

- Modal dialog for assigning tasks to team members

- Wireframe:

wireframes/assignment-modal.txt

Modified Screens

Task List Page

- Changes: Add 'Assign' button to each task, display current assignee

- Wireframe:

wireframes/task-list-updated.txt

Task Detail Page

- Changes: Show assignee info, add inline assign/reassign option

- Wireframe:

wireframes/task-detail-updated.txt

Deleted Screens

None

Step 4: ASCII Wireframes (When Needed)

After breadboarding, if you need to get more specific about layouts, create ASCII wireframes. These are text-based layouts that:

- LLMs can easily parse and reference

- Use fewer tokens than visual formats

- Let you iterate in seconds

- Provide just enough visual detail without over-constraining

Use ASCII wireframes for screens where layout matters, but skip them when the breadboard is clear enough.

This aligns with the "fat marker sketch" concept from Shape Up: broad enough that adding excessive detail is difficult.

Example ASCII wireframe:

┌─────────────────────────────────────────────┐

│ [X] Task Assignment │

│ │

│ Assign task: "Fix login bug" │

│ ───────────────────────────── │

│ │

│ Currently assigned to: Alice Johnson │

│ │

│ Assign to: │

│ ┌─────────────────────────────────────┐ │

│ │ Bob Smith ▼ │ │

│ └─────────────────────────────────────┘ │

│ │

│ Recent assignees: │

│ • Bob Smith │

│ • Carol White │

│ • David Lee │

│ │

│ [Assign Task] [Cancel] │

│ │

└─────────────────────────────────────────────┘Pro tip: Only create wireframes when layout decisions actually matter. Don't wireframe every single screen—trust the breadboards for simple screens and only add visual detail where it's critical to understanding.

Deliverables:

designer_questions.json- Questions and answers (saved in designer/ folder)design_document.md- Contains breadboards, screen inventory, and references to wireframes (in feature root folder)- ASCII wireframes in

wireframes/folder (only when visual detail is needed)

Phase 3: Software Architect

The Architect agent focuses on the how of technical implementation. This agent asks questions about architecture, technology choices, and non-functional requirements.

Step 1: Technical Questions

The architect asks questions to understand:

- Data model: What tables/collections/entities are needed?

- API design: What endpoints are required?

- Performance: Are there scalability concerns?

- Security: What security considerations exist?

- Integration: How does this interact with existing systems?

- Non-functional requirements: Reliability, monitoring, error handling

Example questions:

1. Where should assignment data be stored? (Add field to tasks table or separate assignments table?)

2. Should we support assigning tasks to deleted/inactive users?

3. What's the expected assignment volume? (impacts whether we need optimization)

4. Should assignment be idempotent? (can you assign the same person twice?)

5. How should we handle notifications? (synchronous or async queue?)

6. Do we need to track assignment history for analytics?

7. What existing authentication/authorization system should we integrate with?

8. Should the API support batch assignments for future use?Step 2: Architecture Specification

The architect creates a comprehensive specification covering:

Data Model:

Data Model Changes

tasks table (modify)

Add columns:

assigned_to(uuid, nullable, foreign key to users.id)assigned_at(timestamp, nullable)assigned_by(uuid, nullable, foreign key to users.id)

Indexes:

idx_tasks_assigned_toon assigned_to (for filtering user's tasks)idx_tasks_assigned_aton assigned_at (for sorting by assignment date)

No separate assignments table needed for v1. Keep it simple.

API Endpoints:

API Endpoints

POST /api/tasks/:id/assign

Assigns a task to a user.

Request: { "assigned_to": "user-uuid" }

Response (200 OK): { "task_id": "task-uuid", "assigned_to": "user-uuid", "assigned_at": "2024-01-15T10:30:00Z" }

Errors:

- 404: Task not found

- 403: User doesn't have permission to assign

- 400: Assigned user not in project

Technology Choices in the architecture document:

The architect presents options for key technical decisions in simple markdown format:

Technology Choices

Email Notifications

We need to send email notifications when tasks are assigned.

Option 1: SendGrid (Recommended) ✓

- ✅ Easy setup with good documentation

- ✅ Generous free tier (100 emails/day)

- ✅ Good deliverability rates

- ✅ Simple API

- ❌ Can be expensive at scale ($15/month for 40k emails)

- Decision: Good enough for v1. We can switch later if needed.

Option 2: AWS SES

- ✅ Very cheap ($0.10 per 1,000 emails)

- ✅ Scales infinitely

- ✅ Integrates with existing AWS infrastructure

- ❌ More complex setup (domain verification, DKIM, etc.)

- ❌ Requires dealing with AWS console complexity

- Decision: Skip for v1. Not worth the setup time.

Option 3: Postmark

- ✅ Great deliverability

- ✅ Clean API

- ❌ More expensive than SendGrid

- ❌ Smaller free tier

- Decision: Not needed. SendGrid is sufficient.

Final choice: Start with SendGrid. It's simple, well-documented, and we can always migrate to SES if email volume becomes expensive.

Notification Delivery

How should we send notifications?

Option 1: Synchronous (Recommended for v1) ✓

- ✅ Simple implementation

- ✅ Immediate feedback if email fails

- ✅ No queue infrastructure needed

- ❌ Slightly slower API response (adds ~200ms)

- ❌ Won't scale to thousands of notifications

- Decision: Good enough for v1. Most assignment operations are infrequent.

Option 2: Background Queue (Redis/BullMQ)

- ✅ Fast API response

- ✅ Handles bursts better

- ✅ Can retry failures

- ❌ Adds infrastructure complexity (Redis, worker processes)

- ❌ Harder to debug

- ❌ Overkill for current volume

- Decision: Wait until we actually need it. Don't over-engineer.

Final choice: Synchronous for v1. Send emails directly in the API handler. If response times become a problem, we'll add a queue in v2.

The important technical choices usually fall into two categories:

- Libraries/SDKs - Services you will install on your codebase (e.g.,

@sendgrid/mail,axios) - Third-party APIs - HTTP endpoints you call directly (e.g. Stripe API, Supabase REST API)

Pro tip: Use Reddit & Hacker News as Your BS Detectors

When the AI suggests libraries or tools, validate them on Reddit or Hacker News before committing:

Search: [tool name] reddit or [tool name] hacker news or [tool name] vs [alternative] reddit

Why these platforms? Anonymity breeds honesty. Developers on Reddit and Hacker News will tell you the unvarnished truth, the hidden gotchas, maintenance issues, and better alternatives. If a library has been abandoned for 2 years or has terrible developer experience, these communities will let you know. The AI might suggest something that technically works but is practically unusable.

PS: Avoid LinkedIn for technical validation. It's the worst place to search for honest tool reviews, everyone's polishing their professional image, promoting their company's tools, or building their personal brand. You won't find the brutal truth there.

Quick validation questions to check:

- Is this tool actively maintained?

- Does it involve trade-offs we are willing to accept?

- Does it lack certain functionalities?

- What are the costs associated with that library or API?

Step 3: Implementation Risks

The architect identifies technical rabbit holes and implementation risks:

Implementation Risks

1. Race conditions on concurrent assignments

- Risk: Two users assign the same task simultaneously

- Mitigation: Use database-level locking or last-write-wins (simpler for v1)

- Decision: Last-write-wins is fine. Concurrent assignment is rare.

2. Email delivery failures

- Risk: Email fails but API returns success

- Mitigation: Wrap email sending in try-catch, log failures

- Decision: Log email failures but don't block assignment. User still sees success in UI.

3. N+1 queries when loading task lists

- Risk: Fetching assignee data for each task separately

- Mitigation: Use JOIN or eager loading to fetch assignees in one query

- Decision: Must implement from the start. This is easy to get right now.

Deliverables:

architect_questions.json- Technical questions and answers (saved in architect/ folder)architecture_document.md- Contains architecture spec, technology choices, and implementation risks (in feature root folder)

🔄 Using Specifications with Cursor

Once you have these specifications, implementation in Cursor becomes straightforward. You have three main documents that serve as your source of truth:

- product_requirements_document.md - Product specs (what and why)

- design_document.md - Design specs (how users interact)

- architecture_document.md - Technical specs (how to build)

-

Load the context: Add relevant spec files to your Cursor chat using

@filename- Include the PRD for understanding the feature requirements

- Include the design document for UI/UX work

- Include the architecture document for technical implementation

-

Reference specific sections: Point Cursor to exact requirements

@architecture_document.md Implement the task assignment endpoint according to the specifications in the "API Endpoints" section -

Use acceptance criteria for testing:

@product_requirements_document.md Create tests for the edge cases defined in the "Acceptance Criteria" section -

Keep specs updated: As you discover edge cases during implementation, update the relevant document

- New edge cases → Update PRD

- UI changes → Update design document

- Tech stack changes → Update architecture document

Note on Context Engineering: These specification documents become a core part of your context engineering strategy (covered in Part 5). By referencing them with @filename during implementation, you provide the AI with clear, structured intent rather than relying on conversation history alone. This ensures the AI has the right context at the right time, making implementations more accurate and reducing back-and-forth.

Key Benefits of This Approach

Clarity: Everyone (human and AI) knows exactly what to build

Completeness: All three perspectives (business logic, UX/UI, technical architecture) are covered

Traceability: Every implementation decision traces back to a specification

Reusability: Specs become documentation and onboarding material

Faster Implementation: Less back-and-forth during coding because requirements are clear

Better Testing: Acceptance criteria for edge cases map directly to test cases

Scope Control: No-gos and rabbit holes prevent feature creep and over-engineering

When to Use This Workflow

This comprehensive three-agent workflow works best when working on complex features.

For quick UI edits or small improvements on existing features, you probably don't need this full process, it's likely overkill. Use default vibe coding or Cursor's Plan Mode instead.

The key is finding the right balance on the specification spectrum for your specific feature. Simple feature? Lighter specs. Complex feature with many edge cases? Full workflow.

Templates and Prompts

All the agent prompts, templates, and examples are available in the templates you can download here. The folder structure is organized as follows:

Main Agent Prompts (/agent_prompts/):

- Product Manager prompt (updated with job stories, no-gos, rabbit holes)

- UX/UI Designer prompt (with breadboarding and screen inventory concepts)

- Software Architect prompt (with technology choices and implementation risks)

- Tech Lead prompt (for task breakdown)

Specialized Prompts (/special_prompts/):

- Debugging agent prompt (for complex debugging scenarios)

- UX/UI expert prompt (for specialized design assistance)

Example Feature (/features_example/001-collaborative-task-assignment/):

- Complete three-agent workflow example

- Organized by role:

pm/,ux/,architect/,tasks/ - Product Requirements Document, Design Document, Architecture Document

- ASCII wireframe examples

- Task breakdown example

Drop these into your project and feel free to customize them for your specific needs.

Next up: Now that you have comprehensive specifications, Part 4 covers how to break them down into manageable implementation tasks. It is the last step before we can actually start writing code!

✂️ Part 4: Task Breakdown

This is a critical step you should not skip. If you've done all that hard work specifying your feature, it would be a shame to mess it up during the implementation phase.

The Problem with One-Shot Development

When you've written comprehensive specifications (like we did in Parts 3), you're dealing with complex features that shouldn't be built all at once. Asking an AI to implement everything in one go is like building your dream house and having the foundation crew, painters, and roofers all working on the same site simultaneously. It's chaotic and counterproductive. You need to build the foundation first, then the walls, then the roof, and so on.

The Tech Lead's role here is to transform our detailed specifications into bite-sized, actionable tasks that can be validated at each step by you, the human.

Two Approaches: TaskMaster vs Custom System

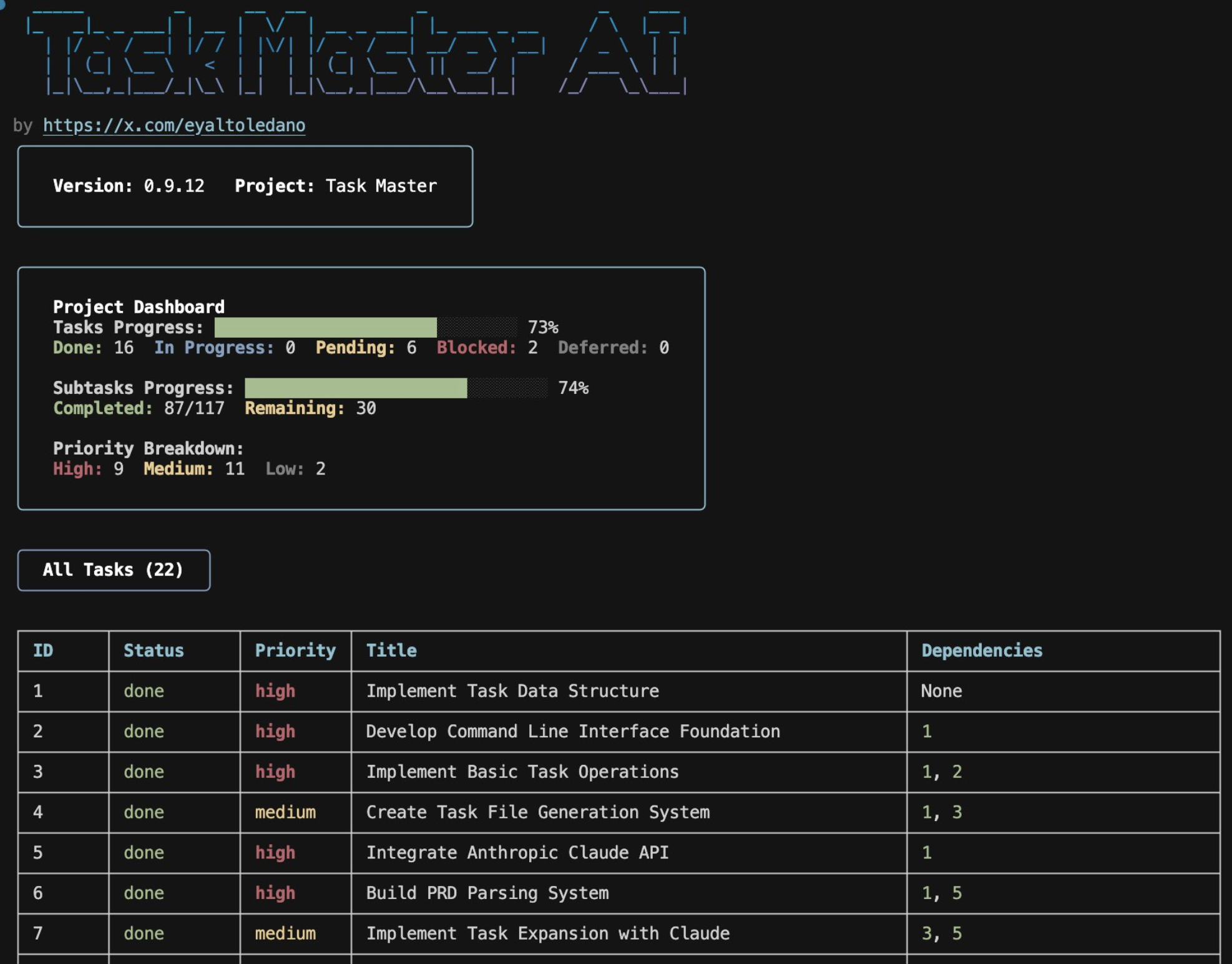

Option 1: TaskMaster

TaskMaster is an open-source project that automates task generation from Product Requirement Documents. It includes an MCP server that allows you to simply call "next step" or "next task" and it automatically knows what to work on next. I've used it extensively. It's effective and handles the complexity of task orchestration for you.

Option 2: Build Your Own System

You can create a simplified version, which uses a prompt-based approach. The templates I provide includes a Tech Lead agent prompt that breaks down specifications into specific, testable tasks.

Going DIY: A Simplified version of TaskMaster

For those who want to build their own system but need the core components, I provide a simplified version inspired by TaskMaster's architecture. This gives you the essential structure without the full complexity of their system. You can start with that and move to TaskMaster if you feel the need for something more sophisticated.

The Three Core Components

1. Tech Lead Prompt (agent_prompts/tech_lead_prompt.md)

- Located in the templates

/agent_prompts/folder - Acts as the "brain" that analyzes your specifications

- Breaks down complex features into manageable tasks

- Handles dependency mapping and risk assessment

- Can be customized for your specific domain or tech stack

2. Task Overview Structure (tasks/task.json)

- Single source of truth for all project tasks

- Contains the same structure as TaskMaster's

tasks.json

📋 Click to see example task.json structure

{

"meta": {

"projectName": "Your Project",

"version": "1.0.0",

"createdAt": "timestamp"

},

"tasks": [

{

"id": 1,

"title": "Setup Database Schema",

"description": "Create initial database tables and relationships",

"status": "pending",

"dependencies": [],

"priority": "high",

"complexity": 6,

"subtasks": [],

"testStrategy": "Verify schema with sample data",

"tags": ["database", "foundation"]

}

]

}3. Individual Task Breakdowns (tasks/[task-id].md)

- Each task gets its own detailed markdown file

- Contains step-by-step implementation instructions

- Includes acceptance criteria and testing guidelines

- Follows the same format as TaskMaster but simplified

📝 Click to see example task breakdown

Task 1: Setup Database Schema

Overview

Create the foundational database structure for the user management system.

Steps

- Create users table with email, password_hash, created_at

- Create sessions table with user_id, token, expires_at

- Add foreign key constraints

- Create indexes for performance

Acceptance Criteria

- Users table created with all required fields

- Sessions table properly linked to users

- All constraints and indexes applied

- Sample data can be inserted successfully

Testing Strategy

Run migration script and verify with sample data insertion.

How It Works Together

- Input: Your comprehensive specifications (PRD, design document, architecture document)

- Processing: The Tech Lead prompt analyzes everything and generates structured tasks

- Output:

tasks/task.jsonwith the complete task overview- Individual

tasks/[id].mdfiles for each task's detailed breakdown

- Implementation: You work through tasks in dependency order, marking them complete as you go

This simplified approach gives you 80% of TaskMaster's benefits with 20% of the complexity, perfect for teams who want systematic task breakdown without having to install and learn a new tool.

The Two-Axis Breakdown Strategy

The Tech Lead agent breaks down scope across two critical axes:

1. Behavior Axis: Don't implement all user behavior at once

- Start with core user flows

- Add edge cases incrementally

- Build validation step by step

2. System Axis: Follow the natural development progression

- Data Model → Backend Logic → Frontend Components → UX Polish

- Each layer builds on the previous one

- Dependencies are clear and manageable

The Human-in-the-Loop Advantage

Here's where the magic happens. After each task completion, you can:

- Spin up

localhostand test the current milestone - Validate the implementation matches your expectations

- Catch issues early before they compound

- Course-correct immediately if something feels off

This prevents the nightmare scenario where the AI builds everything and you discover fundamental problems at the end. If the data model is wrong, you catch it after the first task, not 20 tasks later when it's already connected to your UI and you now have to untangle an absolute mess.

Why This Matters for Complex Features

If a feature is simple enough to implement in one shot, you probably don't need all these specifications. But if it's complex enough to require detailed PRDs, user stories, and technical architecture, then it's definitely complex enough to benefit from systematic task breakdown.

The goal is creating testable milestones where you can bring human judgment into the loop and ensure the AI stays on track.

🧩 Part 5: Context Window

Finally!

We now move into the implementation phase. We have great specifications, clear requirements, and a solid breakdown of how to build our feature. The AI model is ready to start working on the code.

Good specifications and detailed breakdowns are essential for good implementation, but there's a third element that really matters: context engineering aka how you manage what information the AI actually sees when it's working.

Context Window Management

Two key aspects affect context engineering when vibe coding:

Quantity (How Much Context)

The amount of content you provide matters. Breaking down tasks specifically should already help with this, but you need to be deliberate about what to include.

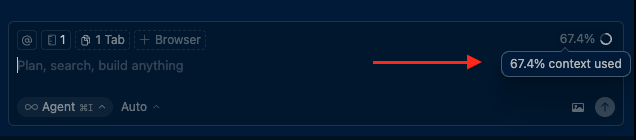

Here's a practical rule of thumb for Cursor's context window:

Cursor's context size indicator

Cursor's context size indicator

When you see the context indicator creeping above 50% of the total context window, you have two options:

- Start a new chat - This gives you a fresh context window for new features

- Use the

/summarizecommand - This can help reduce the context window by condensing previous conversation history

Pro tip: Start a new chat for each new feature. Every message adds context to the AI, and old context can distract the model on new features, leading to worse performance overall. If you're working on a feature and wish to keep the context from the previous chat but the context window is already used above 50%, use the /summarize command in Cursor.

Quality (How Good the Context Is)

Quality matters just as much as quantity. Outdated or poorly formatted context can actually confuse your LLM, in just the same way it would confuse a developer.

Here's the tricky part about bad context: the AI won't tell you it's working with outdated or wrong information. It will confidently generate code based on whatever you give it. Bad context doesn't lead to "I don't know" responses—it leads to confident but wrong solutions that waste your time debugging.

The Two Areas of Context

The context provided to the model consists of two main parts:

1. Existing Codebase (Already Handled)

Modern AI coding tools like Cursor handle this exceptionally well. They use advanced retrieval systems and vectorize your entire codebase, automatically finding:

- The right files to edit

- Related components and dependencies

- Existing patterns and conventions

- Code that needs to be updated or removed

You don't need to manually manage this - the tools are already quite good at finding the right context from your codebase. For more details on how Cursor handles codebase indexing, see the official documentation.

2. External Context (Your Responsibility)

This is where you need to be deliberate about what context you provide. External context breaks down into three critical types:

- Documentation - Latest API docs, library documentation, and framework guides

- Logs - Console logs, server logs, and error stack traces

- Visual Feedback - Screenshots, recordings, and visual context for UX/UI work

In order to provide such external context, we're going to use the infamous MCPs (Model Context Protocols).

Understanding MCPs

Before we dive into the different types of external context, it's important to understand MCPs (Model Context Protocols). Invented by Anthropic, MCPs are a standardized way for AI coding assistants to connect with external tools and data sources. Think of them as bridges that allow your AI to access real-time information, interact with browsers, scrape websites, and perform automated tasks that weren't possible before.

MCPs have quickly become the standard for extending AI capabilities, but with thousands of MCPs now available, it's easy to get overwhelmed.

For each use case, I only recommend a handful of MCPs. Just pick one. Rather than having 50 MCPs installed, you'll have a much better workflow with a handful of high-quality, thoroughly tested tools that get the job done really well. The MCPs I recommend below are the ones I've extensively tested and can confidently recommend for production use.

Let's explore each of these in detail:

📚 Documentation (Keep it Current)

Why it matters: Your model's training cutoff might not include the latest documentation for your stack. Having current API docs, library documentation, and framework guides is crucial.

Best practices:

- Reference the latest version of your framework's documentation

- Include specific API endpoints and their current parameters

- Pull in migration guides when working with framework updates

- Use MCPs (Model Context Protocols) to fetch real-time documentation

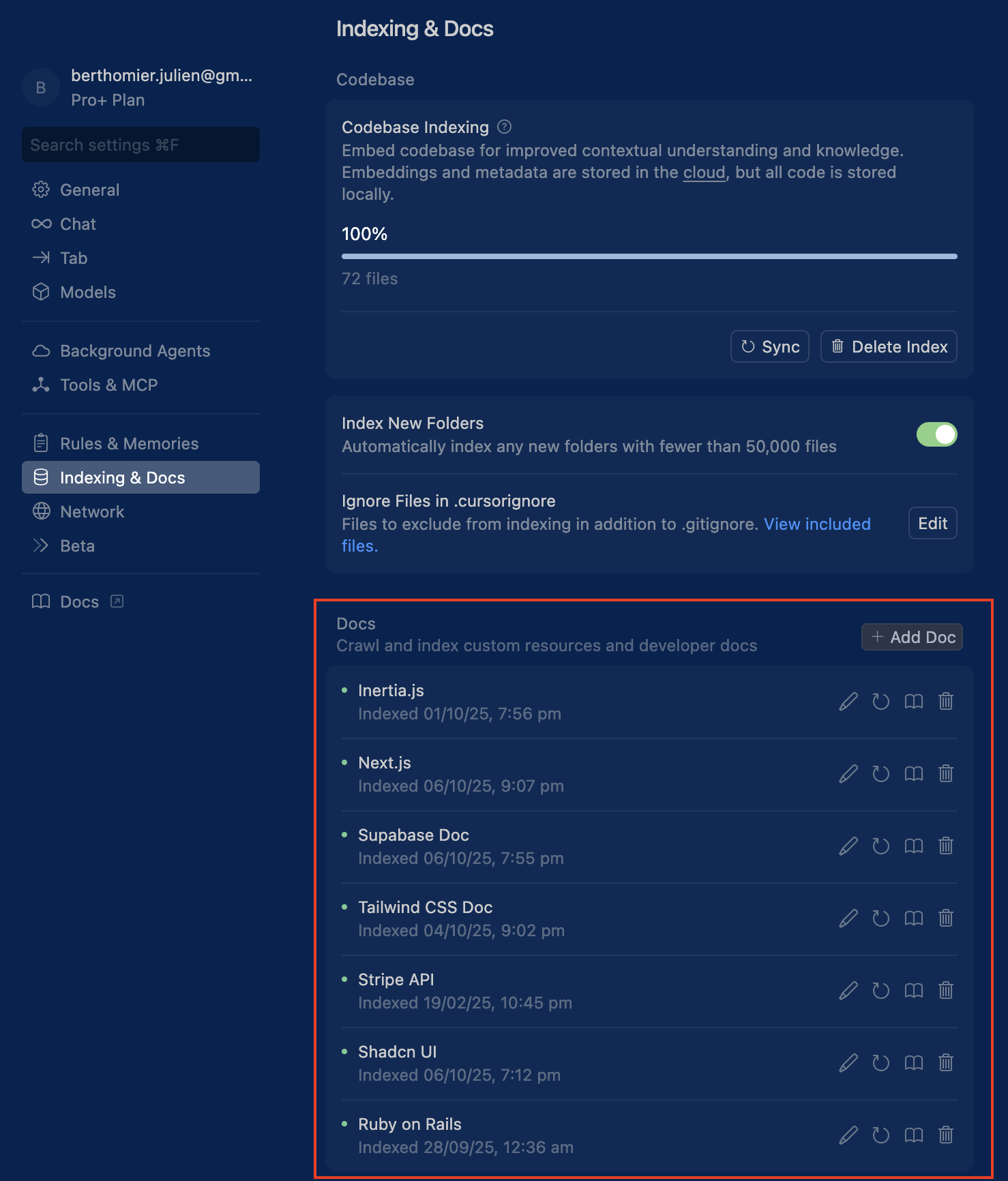

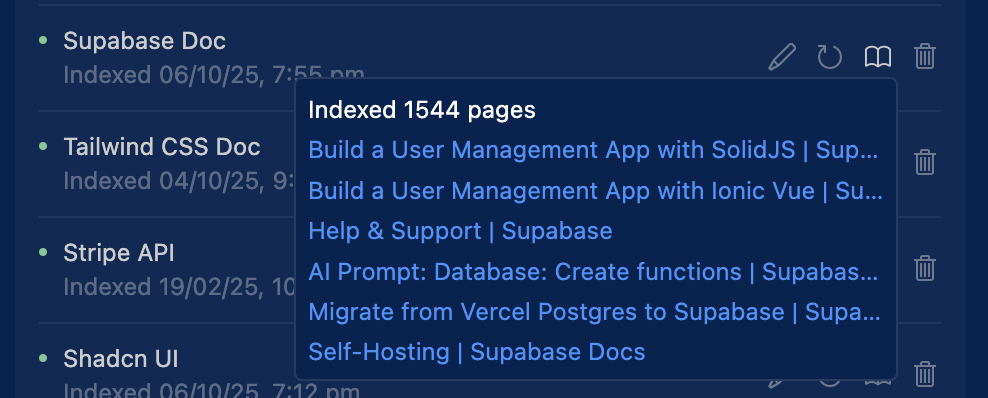

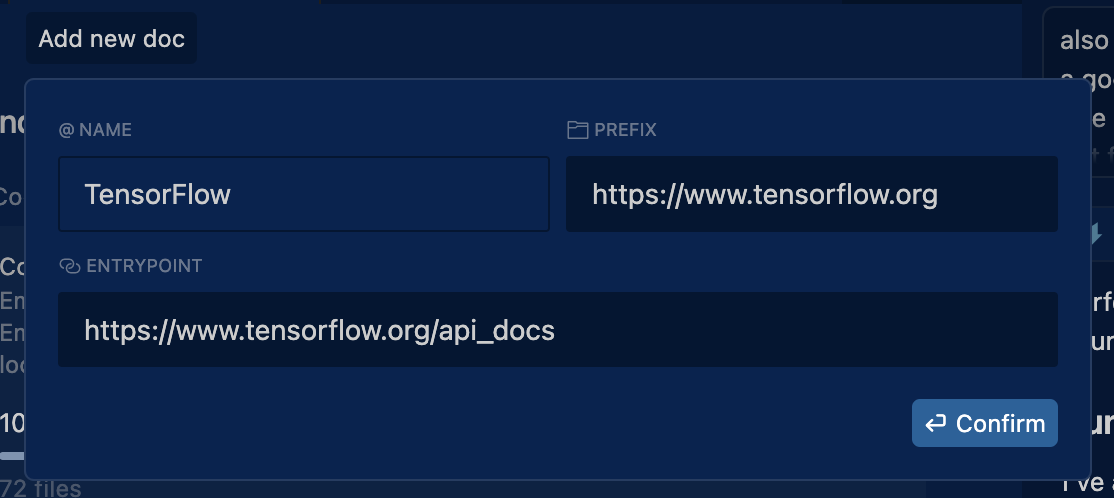

Adding Framework Documentation to Cursor

Before diving into MCPs for external documentation, you should first add the documentation for your key frameworks and libraries directly to Cursor. This gives you immediate access to official docs without needing external tools.

How to add documentation in Cursor:

- Go to Cursor Settings → Indexing & Docs → Docs

- Add documentation URLs for your stack. For example:

- Next.js:

https://nextjs.org/docs - React:

https://reactjs.org/docs - Supabase:

https://supabase.com/docs - Tailwind CSS:

https://tailwindcss.com/docs - shadcn/ui:

https://ui.shadcn.com/docs

- Next.js:

- Use in your code by referencing

@docsfollowed by the topic:@docs useEffect- pulls React useEffect documentation@docs supabase auth- pulls Supabase auth documentation@docs nextjs routing- pulls Next.js routing documentation

This integration allows Cursor to fetch and display official documentation within your editor, reducing context switching and ensuring you always have the latest docs available. For more details, see Cursor's documentation management guide.

Example for a typical stack: If you're using Next.js + React + Supabase + Tailwind CSS + shadcn/ui, add all five documentation sources. This way, when the AI is working on your code, it has immediate access to the exact API patterns, component props, and best practices for your specific stack.

Pro tip: Learning from Open Source Projects

When building a feature inspired by an open-source project, use Gitingest to turn any GitHub repository into a prompt-friendly text digest that Cursor can reference. This is incredibly helpful for understanding architectural decisions and implementation patterns from projects you admire.

Use case: You see a feature you like in an open-source tool (e.g., Excalidraw's canvas interaction, FastAPI's routing pattern) and want to replicate it in your project. Instead of manually browsing the repository:

- Go to Gitingest and paste the repository URL (or replace 'hub' with 'ingest' in any GitHub URL)

- It generates a text digest of the entire codebase

- Save it as a file to reference it in Cursor

- The LLM can now understand how they implemented the feature and suggest the best architecture for your use case

For a more powerful approach, you can turn any GitHub repository or documentation site into a dedicated MCP server using GitMCP. This gives your AI assistant persistent, contextual access to the entire codebase.

This is especially powerful when you're frequently referencing a specific framework or library, as the AI can pull context from the source code directly without you needing to manually copy and paste sections.

This dramatically speeds up learning from existing codebases and helps your AI assistant provide better architectural recommendations based on proven patterns.

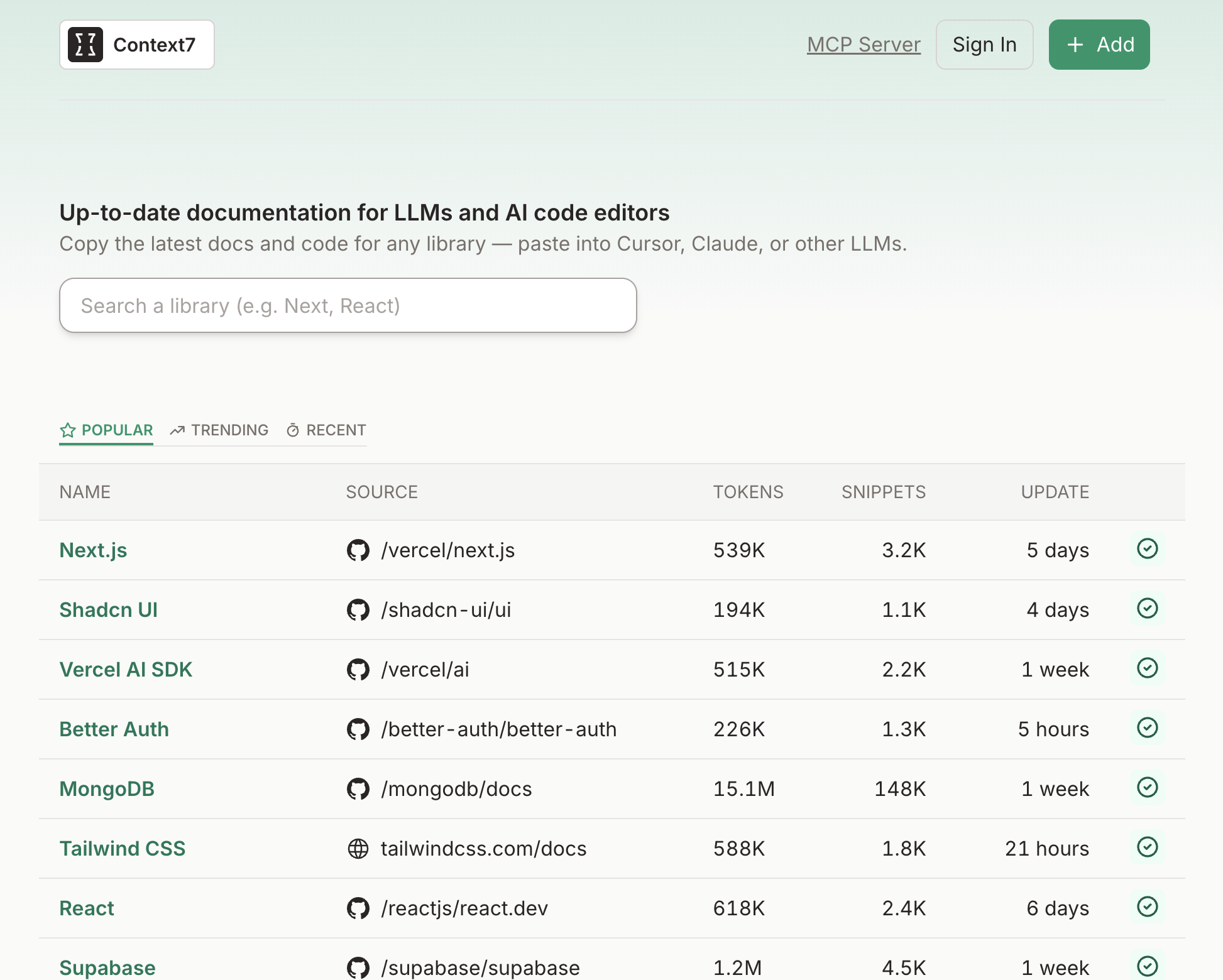

Recommended MCPs for Additional Documentation:

🏆 Context7

For fetching up-to-date library and API documentations. See their (MCP Server)

🏆 FireCrawl

For scraping and extracting content from documentation websites. See their (MCP Server)

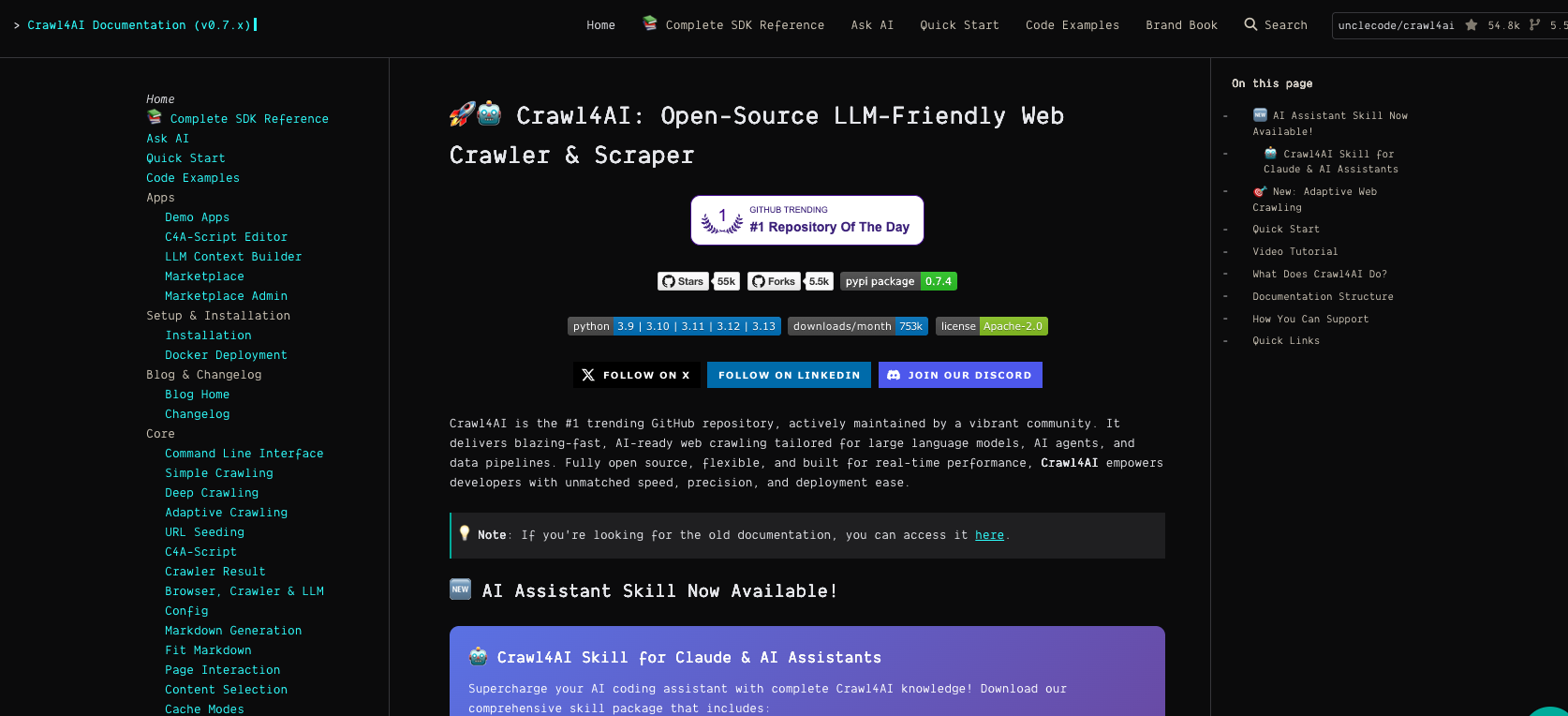

🏆 Crawl4AI

A fully open-source LLM-friendly web crawler and scraper for advanced data extraction. Unlike Context7 or FireCrawl, Crawl4AI gives you complete control and customization but requires significantly more setup and technical knowledge. Perfect for developers who want to self-host their crawling infrastructure or need advanced extraction capabilities. Check their documentation to learn how to integrate it as an MCP server.

Note: This option is recommended for teams with technical expertise who value full control and customization over ease of use.

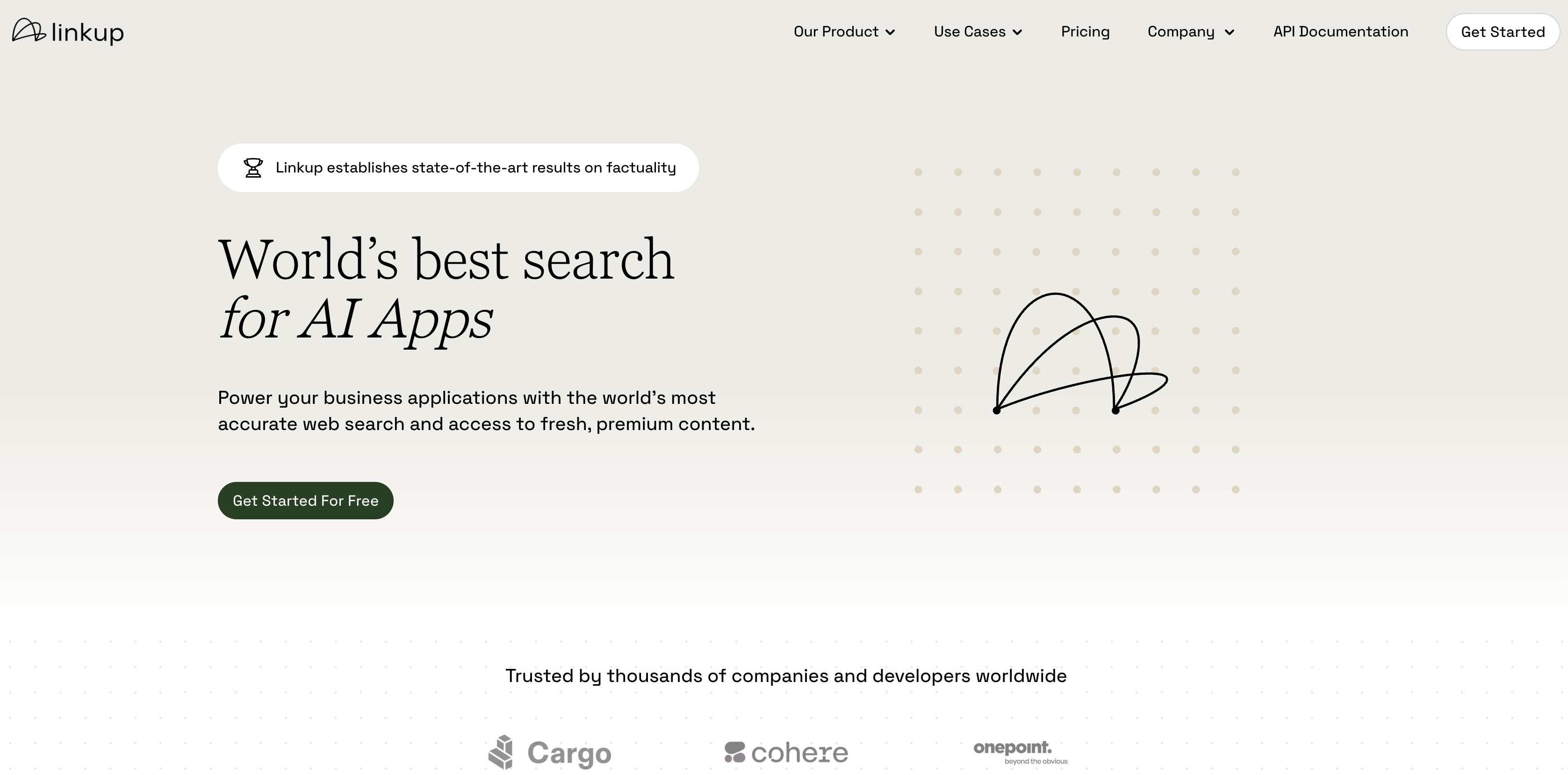

🏆 Linkup

For real-time web search and accessing the latest documentation. Ranks #1 on OpenAI's SimpleQA factuality benchmark with AI-optimized search that provides structured responses and source attribution. See their MCP Server for Claude.

🏆 Perplexity MCP

For AI-powered search with cited sources. Perplexity excels at answering technical questions with source attribution, making it ideal for finding current best practices, comparing libraries, or researching implementation approaches when documentation alone isn't enough.

🔍 Logs (Show the Symptoms)

Why it matters: When debugging, the AI needs to see the actual error messages, stack traces, and console output to understand what's going wrong.

Best practices:

- Share both frontend console logs and server logs

- Include the full error stack trace

- Show network request/response details when relevant

- Capture logs at the moment the issue occurs

Recommended MCPs for Logs:

🏆 Chrome DevTools MCP

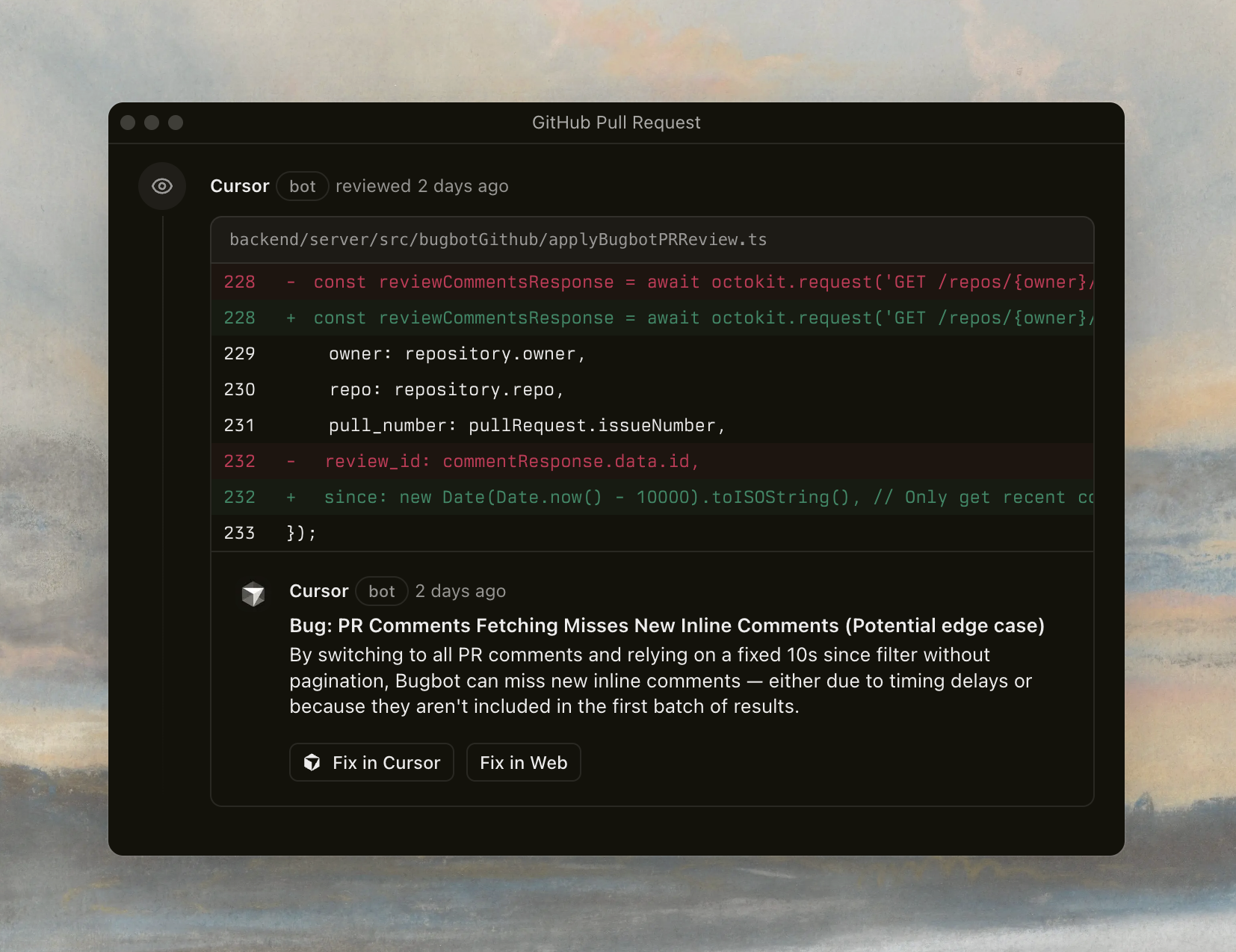

For comprehensive browser debugging, performance analysis, and automated testing. Provides direct access to Chrome DevTools capabilities including console logs, network requests, performance traces, and browser automation. Essential for debugging frontend issues, analyzing performance bottlenecks, and testing user interactions. The good part: it is maintained by Google and the Chrome DevTools team.

🏆 Playwright MCP

For comprehensive browser automation and end-to-end testing. Particularly powerful when you need to test specific user flows and navigate through your application to verify functionality. Allows you to automate complex user interactions, form submissions, and multi-step workflows. Essential for validating complete user journeys and ensuring your application works as expected from a user's perspective. The good part: it is maintained by Microsoft.

🏆 Browser MCP

For browser automation using your actual logged-in browser sessions. Unlike Playwright which launches fresh instances, Browser MCP connects to your existing Chrome browser via an extension. This means the AI can navigate websites where you're already logged in, avoiding bot detection and CAPTCHAs. Perfect for testing authenticated user flows or debugging issues that only appear when logged in.

❌ Browser Tools (Not recommended anymore)

I used to recommend this tool, but with Chrome DevTools MCP now available, it no longer makes sense. There are two main issues:

- You have to download a Chrome extension that is not verified by the Chrome store for it to work.

- It doesn't seem to be maintained anymore; it's been many months since the project was last updated.

🎨 Design (Give it Eyes)

When building products, you'll encounter design needs across five main categories. Each requires different tools and approaches. Here are the top recommended MCPs for each category based on extensive testing.

The Five Categories of Design Needs

When working on UX/UI, you typically need help with:

- UI Components - Buttons, forms, layouts, and interactive elements

- Illustrations - Custom graphics, empty states, loading states, onboarding visuals

- Images - Generated images, backgrounds, hero sections

- Icons - Interface icons, feature icons, navigation elements

- Visual Feedback - Annotations, UI reviews, and bulk feedback on existing interfaces

Let's cover the best MCPs and approaches for each:

1. 🧩 UI Components

When building interfaces, you need design inspiration and pre-built components that match modern UI patterns.

Recommended MCPs:

🏆 Magic UI MCP (If using Magic UI library)

If you're already using Magic UI as your component library, their MCP provides direct access to their component ecosystem. Ideal for teams committed to the Magic UI design system.

🏆 Magic MCP from 21st.dev (Library Agnostic - Recommended)

This is the most versatile option for UI component generation. Magic MCP is library-agnostic, meaning it works with any UI framework you're using (shadcn/ui, Material-UI, Chakra UI, etc.).

When to use it:

- Designing landing pages and need inspiration for hero sections, pricing tables, or feature grids

- Building product interfaces and want modern UI patterns for dashboards, forms, or modals

- Need quick component iterations without leaving Cursor

- Want to explore different UI approaches before committing to a design

Why it's powerful: It's like having v0.dev directly in your Cursor workflow, generating production-ready React components that integrate with your existing codebase.

Pro tip: When Words Fail, Show Don't Tell

Sometimes you know exactly what design you want but struggle to describe it. Instead of fighting with prompts, use these resources:

Design Inspiration (screenshot and reference them in Cursor):

- Dribbble - High-quality UI designs from professional designers